By Rachel Roumeliotis, Director, Open Source Strategy at Intel

OPEA 1.1 is a powerhouse of a release, arriving on the heels of the major OPEA 1.0 release that dropped in September. We have big news in hardware: several of our end-to-end GenAI examples are now deployable on AMD® GPUs using AMD® ROCm™, including CodeTrans, CodeGen, FaqGen, DocSum, and ChatQnA, with more to come. On Intel® Arc™ GPUs, vLLM powered by OpenVINO can now perform optimized model serving. And, of course, we have newly supported models: llama-3.2 (1B/3B/11B/90B), glm-4-9b-chat, and Qwen2/2.5 (7B/32B/72B). That is just the beginning. Read on for more on new examples, components, infrastructure, and benchmarks, along with news of our first Powered by OPEA solutions.

OPEA 1.1 Release Highlights

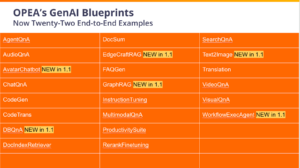

OPEA 1.1 brings six new end-to-end GenAI examples! Expect this list to keep growing in 2025.

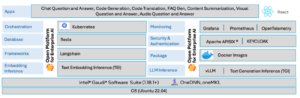

The 1.1 release supports new GenAI capabilities: Image-to-Video and Text-to-Image with a Stable Diffusion microservice, Text-to-SQL, Text-to-Speech with a GPT-SoVITS microservice, Avatar Animation, and GraphRAG with a LlamaIndex microservice. We have also added asynchronous support for microservices, multimedia support, and vLLM backends for summarization, FAQ generation, code generation, and Agents, all of which you can see in the GenAIComps repo.

OPEA 1.1 also introduces GenAI Studio, a no-code/low-code application for creating end-to-end solutions, covering building, testing, evaluation, performance benchmarking, and deployment. Enhanced observability tools offer real-time insights into component performance and system resource utilization, and key system metrics can now be monitored with Grafana. Helm chart support is expanded with newly supported examples, including AgentQnA, AudioQnA, FaqGen, and VisualQnA, and with microservices including chathistory, mongodb, prompt, and Milvus for data-prep and retriever. And, finally, two benchmark kits have been added: HELMET and LongBench.

Take a look at the official release notes for all the details.

‘Powered by OPEA’ Projects

We are also able to share announcements about the very first ‘Powered by OPEA’ projects. First, we have a turnkey Generative AI solution built on Intel® Gaudi® 3 AI accelerators, the Dell PowerEdge XE9680 platform, and OPEA.

Also just announced is H3C’s AIGC LinSeer, the first application of the new Intel® Enterprise AI Solution offering. It is an integrated software and hardware solution designed for a wide range of enterprise AI application scenarios, including education, energy, and healthcare. It demonstrates sensitive recognition, rapid response capabilities, and high accuracy in both enterprise and medical application scenarios.

Are you working on a solution that is, or could be, ‘Powered by OPEA’? Examples include:

- a custom Proof of Concept (PoC) or use case using OPEA

- a customer-driven solution that has hosted/managed services using OPEA

- OPEA documentation or code for a node and/or Kubernetes cluster, on-prem or with Cloud Service Providers (CSPs)

- a new custom end-to-end GenAI solution that may appear as a hosted service via CSP marketplaces

We’d love to hear about it, and we’d love to help! Please let us know when you use the logo with a quick email to info@opea.dev.

The ‘Powered by OPEA’ logo and guidelines for its use can be found here.

Expect the same furious pace in 2025!

Want to stay up to date on OPEA? Join our mailing list by visiting OPEA.dev.